How many Buckets?

This blog post was originally published by David Maier.

In Couchbase, the equivalent of a database is called a bucket. A bucket is basically a data container that is split into 1024 partitions, the so-called vBuckets. Each partition is stored as a Couchstore file on disk, and the partitioning is also reflected in memory. Each configured replica adds another 1024 replica vBuckets. So a bucket with 1 replica configured has 1024 active vBuckets and 1024 replica vBuckets. The vBuckets are spread across the cluster nodes. In a 2-node cluster each node would have 512 active vBuckets; in an n-node cluster each node would by default have roughly 1024/n active vBuckets.
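The arithmetic above can be sketched in a few lines of Python. This is only an illustration of the numbers, not of how Couchbase actually assigns vBuckets to nodes; the function names are made up for this example, and the even "first nodes get one extra" split is an assumption.

```python
from typing import List

NUM_VBUCKETS = 1024  # number of partitions per bucket, as described above


def active_vbuckets_per_node(num_nodes: int) -> List[int]:
    """Spread the 1024 active vBuckets as evenly as possible over the nodes."""
    if num_nodes < 1:
        raise ValueError("a cluster needs at least one node")
    base, remainder = divmod(NUM_VBUCKETS, num_nodes)
    # Assumption for this sketch: the first `remainder` nodes take one extra.
    return [base + 1 if i < remainder else base for i in range(num_nodes)]


def total_vbuckets(num_replicas: int) -> int:
    """Active plus replica vBuckets for a single bucket."""
    if num_replicas < 0:
        raise ValueError("the replica count cannot be negative")
    return NUM_VBUCKETS * (1 + num_replicas)


if __name__ == "__main__":
    print(active_vbuckets_per_node(2))  # two nodes share the active vBuckets
    print(active_vbuckets_per_node(3))  # 1024 is not divisible by 3
    print(total_vbuckets(1))            # one replica doubles the total
```

With 3 nodes the split cannot be exact, which is why the text says "roughly 1024/n".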

A frequently asked question is ‘How many buckets should I create?’.

There are multiple aspects to take into account here.

- Max. possible number of Buckets

- Logical data separation

- Load separation

- Physical data separation

- Multi-tenancy

Max. possible number of Buckets

Before we start talking about why it might make sense to use multiple buckets, let’s talk about how many buckets are possible in practice per cluster.

As we will see, a bucket is not just a logical container but also a physical one. Furthermore, some cluster management happens per bucket. Creating hundreds of buckets would therefore not be a good idea, because it would lead to management overhead. The actual maximum depends on sizing, workload and so on. Couchbase usually recommends a maximum of 10 buckets.

Logical Data Separation

Let’s assume that you have multiple services. In a service-oriented approach (and especially with microservices), those services are decoupled by having disjoint responsibilities. An example would be:

- Session Management Service: Create/validate session tokens for authorization purposes

- Chat Service: Send/retrieve messages to/from users

The session management service is indeed used by the chat service, but the two services have disjoint responsibilities.

Logical data separation means that we create 2 buckets so that sessions and messages are kept logically apart. Services can be co-located on one cluster as long as their scalability requirements are compatible. If these requirements are completely different, it makes sense to set up a separate cluster.

Load Separation

The workload pattern of the session management service is indeed different from that of the chat service. Millions of users sending and reading messages cause a lot of writes and reads, whereas token-based authorization causes mostly reads and far fewer writes for the log-ins.

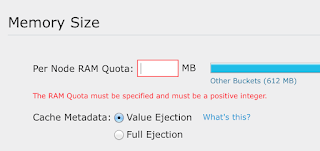

So it would be useful to create the session management bucket with different settings than the messaging one. For a session management use case it makes sense to have all sessions cached, so that the question of whether a user is authorized to act in the context of a specific session can be answered quickly.

The point here is that it makes sense to have multiple buckets (or even multiple clusters) to better support the individual load patterns. Buckets with a high read throughput benefit from higher memory quota settings.

Buckets with higher disk write throughput benefit from a higher disk I/O thread priority.

Another aspect is load separation for indexing purposes. In 3.x this refers to the View indexing load. Couchbase Views are defined per bucket by using map-reduce functions (one bucket can have multiple Design Documents, and one Design Document contains multiple Views). Views are sharded across the cluster, so each node is responsible for indexing its own data. Views can play multiple roles:

- Primary Index: Only emit a key of a KV-pair as the key into the View index

- Secondary Index: Emit a property of the value of a KV-pair as the key into the View index

- Materialized View: Emit a key and a value into the View index

Views are updated by incremental Map-Reduce. This means that the creation, update or deletion of a document is reflected in the View index. Let’s assume that 20% of the documents in your bucket are user documents. Your Views only work with user documents, so your map function filters out everything else. The map function is nevertheless executed for every document and skips 80% of them after checking whether they have a type attribute with the value ‘User’. This means some overhead. So for Views it would be a good idea to move these 20% of the data into another bucket called ‘users’. The Views are then only created for the ‘users’ bucket.
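The overhead can be made visible with a small simulation. Real Couchbase View map functions are written in JavaScript and run inside the server; the Python below is only a stand-in that counts how often a map function is invoked. The document contents, the `name` property and the helper names are invented for this sketch.

```python
from typing import Callable, Dict, List, Tuple

Doc = Dict[str, object]
Rows = List[Tuple[object, object]]


def user_map(doc_id: str, doc: Doc, emit: Callable[[object, object], None]) -> None:
    """Stand-in for a View map function that only indexes user documents."""
    if doc.get("type") == "User":
        emit(doc.get("name"), None)


def build_index(docs: Dict[str, Doc]) -> Tuple[int, Rows]:
    """Run the map function over every document and count the invocations."""
    rows: Rows = []
    calls = 0
    for doc_id, doc in docs.items():
        calls += 1  # the map function runs even if nothing is emitted
        user_map(doc_id, doc, lambda key, value: rows.append((key, value)))
    return calls, rows


# Hypothetical mixed bucket: 8 message documents and 2 user documents (20%).
mixed_bucket: Dict[str, Doc] = {
    f"msg::{i}": {"type": "Message", "text": "hi"} for i in range(8)
}
mixed_bucket["user::1"] = {"type": "User", "name": "alice"}
mixed_bucket["user::2"] = {"type": "User", "name": "bob"}

# The same user documents moved into a dedicated 'users' bucket.
users_bucket = {k: v for k, v in mixed_bucket.items() if v["type"] == "User"}

mixed_calls, mixed_rows = build_index(mixed_bucket)
users_calls, users_rows = build_index(users_bucket)

if __name__ == "__main__":
    print("mixed bucket:", mixed_calls, "map calls,", len(mixed_rows), "rows")
    print("users bucket:", users_calls, "map calls,", len(users_rows), "rows")
```

Both variants produce the same index rows, but the dedicated bucket gets there without the map calls that emit nothing.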

In Couchbase Server 4.0, Global Secondary Indexes (GSIs) help here. As the name says, a GSI is global: it resides on a single node (or on multiple nodes for failover purposes) and is not sharded across the cluster. The indexing node receives a stream of all mutations and still has to apply the filter criteria while indexing, but the indexing load is separated from the data serving nodes and so does not affect them.

CREATE INDEX def_name_type ON `travel-sample`(`name`) WHERE `_type` = "User";

So you gain control over the indexing load separation by deciding which node serves which index.

Physical Data Separation

The previously mentioned load separation is closely related to a physical separation.

Storing several GSIs on several machines means separating them physically. So Couchbase Server 4.0 and its multi-dimensional scalability feature, whereby the dimensions are

- Data Serving

- Indexing

- Querying

allows you to scale up each dimension individually. A node which runs the query service can now have more CPUs, whereas a node which runs the data service can have more RAM in order to support lightning-fast reads.

Physical data separation is also possible per bucket. Couchbase allows you to specify separate data and index directories. So it is already possible to keep the GSIs or Views on faster SSDs, whereas (depending on performance requirements) your data can be stored on bigger, slower disks. Each bucket is reflected as one folder on disk. So it is also possible to mount specific bucket folders on specific disks, which gives you physical data separation per bucket.

Multi-tenancy

The multiple buckets question is also often asked in the context of multi-tenancy. What if my service/application has to support multiple tenants? Should every tenant then have its own bucket?

Let’s answer this question by asking another one. Let’s assume you still use a relational database system. Would you create one database for every tenant? The answer is most often ‘No’, because the service/application itself models the tenancy. It is more likely that there is a tenants table which is referenced by a user table (multiple users belong to one tenant). I have seen only very few enterprise applications in the wild that relied on features of the underlying database system (like database-side access control) for this purpose.

In Couchbase you have in theory the following ways to realize multi-tenancy:

- One tenant per cluster: This would be suitable if you provide Couchbase as a Service. Then your customers have full access to Couchbase and they implement their own services/applications on top of it. In this case a cluster per tenant makes sense because the cluster needs to be scaled out dependent on the performance requirements of your customer’s services/applications.

- One tenant per bucket: As explained, there are two reasons why it is often not an option. The first one is the answer to the question above. The second one is the max. number of buckets per cluster.

- One tenant per name space: As explained, your application often already models multi-tenancy. So you can model a kind of name space per tenant by using key prefixes. (Each document is stored as a KV-pair in Couchbase.) Every key that starts with a specific tenant id belongs to that tenant. If a user with an email address of ‘something@nosqlgeek.org’ logs in, then they only have access to documents whose keys have the prefix ‘nosqlgeek.org’. The key pattern for a message would be ‘$tenant::$type::$id’, so for instance ‘nosqlgeek.org::msg::234234234’.
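The key-prefix approach can be sketched as follows. This is a minimal illustration of the ‘$tenant::$type::$id’ pattern, assuming that the tenant id is simply the domain part of the user's email address, as in the example above. The helper names are hypothetical and the check is done in application code; nothing here is a Couchbase SDK call.

```python
KEY_SEPARATOR = "::"


def tenant_from_email(email: str) -> str:
    """Derive the tenant id from the domain part of an email address."""
    local, sep, domain = email.rpartition("@")
    if not sep or not local or not domain:
        raise ValueError(f"not a valid email address: {email!r}")
    return domain.lower()


def make_key(tenant: str, doc_type: str, doc_id: str) -> str:
    """Build a document key following the $tenant::$type::$id pattern."""
    return KEY_SEPARATOR.join((tenant, doc_type, doc_id))


def may_access(email: str, key: str) -> bool:
    """Allow access only to keys inside the user's own tenant name space."""
    # Including the separator prevents one tenant id from matching as a
    # prefix of a longer, different tenant id.
    return key.startswith(tenant_from_email(email) + KEY_SEPARATOR)


if __name__ == "__main__":
    key = make_key("nosqlgeek.org", "msg", "234234234")
    print(key)
    print(may_access("something@nosqlgeek.org", key))
    print(may_access("someone@example.com", key))
```

The application would run a check like `may_access` before every read or write, since the bucket itself does not enforce the name space.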

Conclusion

Multiple buckets make sense for logical data separation in the context of service-oriented approaches. If the max. number of buckets per cluster would be exceeded, or if the scalability requirements of the buckets are too different, then an additional cluster makes sense. An important reason for multiple buckets in 3.x is load separation or physical data separation. 4.0 helps you to move the indexing load to other nodes, which gives you more control over how the indexing load is balanced. Multi-tenancy should normally be handled via name spaces (key prefixes) and not via multiple buckets.